Abstract

There has been significant progress in emotional Text-To-Speech (TTS) synthesis technology in recent years. However, existing methods primarily focus on synthesizing a limited number of emotion types and achieve unsatisfactory performance in intensity control. To address these limitations, we propose EmoMix, which can generate emotional speech with a specified intensity or a mixture of emotions. Specifically, EmoMix is a controllable emotional TTS model based on a diffusion probabilistic model and a pre-trained speech emotion recognition (SER) model that is used to extract emotion embeddings. Mixed emotion synthesis is achieved by combining the noises predicted by the diffusion model conditioned on different emotions within a single sampling process at run-time. To control intensity, we combine the noise predicted for Neutral with the noise predicted for a specific primary emotion in varying degrees. Experimental results validate the effectiveness of EmoMix for mixed emotion synthesis and intensity control.

Model Architecture

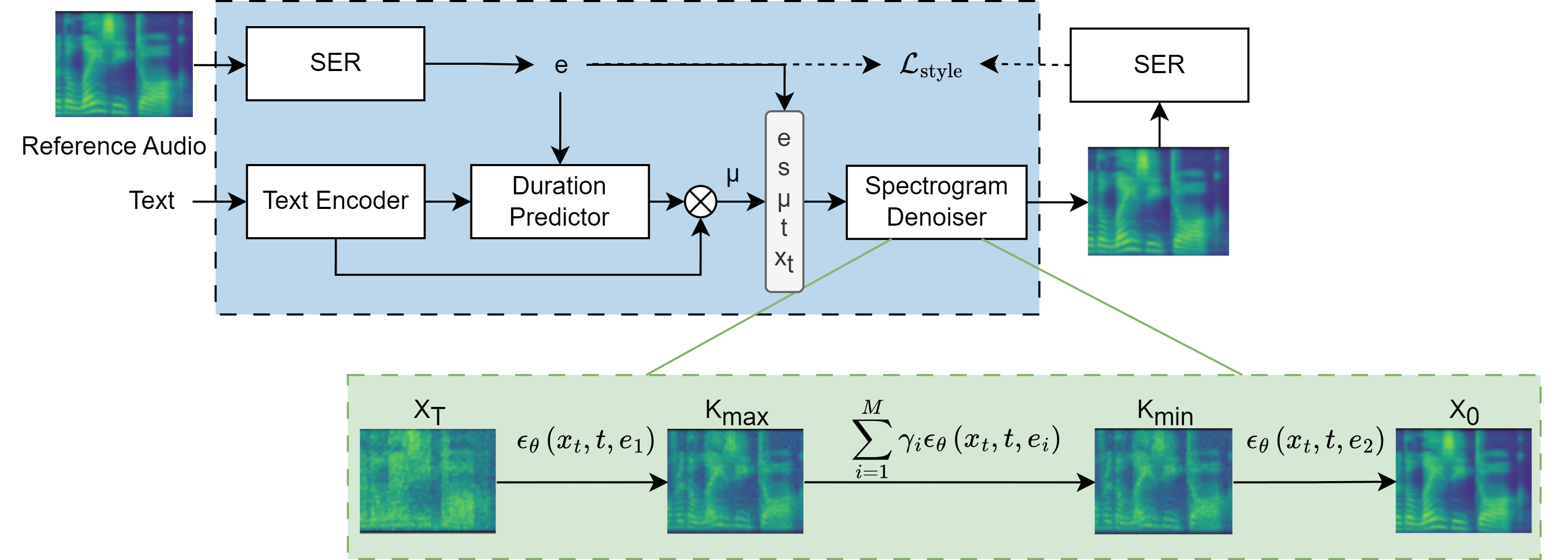

Overview of the EmoMix architecture. The green part represents the extended sampling process for mixed emotion synthesis at run-time. The dotted arrows are used only during training. The SER models are pre-trained and their parameters are frozen.

Experiment

Mixed Emotion Synthesis

For ease of understanding, the following table expresses each mixed emotion with the formula “Mixed Emotion = Base + Mixed-in”.

| Mixed Emotion = Base + Mixed-in |
|---|
| Disappointment = Sad + Surprise |
| Outrage = Angry + Surprise |
| Excitement = Happy + Surprise |

| Mixed Emotion | Base | Mixed-in | Mixed Emotion |
|---|---|---|---|
| Disappointment | | | |
| Disappointment | | | |
| Outrage | | | |
| Excitement | | | |
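The noise combination behind mixed emotion synthesis can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes the two conditional noise predictions are blended as a convex combination, and `mix_noise`, `toy_denoiser`, the weight `gamma`, and the constant emotion embeddings are all hypothetical names and values introduced here for illustration.

```python
import numpy as np


def mix_noise(eps_base, eps_mixed_in, gamma):
    """Blend two noise predictions; gamma is the weight of the mixed-in emotion.

    Assumption: a convex combination. The exact weighting rule is not given here.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    return (1.0 - gamma) * eps_base + gamma * eps_mixed_in


def toy_denoiser(x_t, t, emotion_embedding):
    """Hypothetical stand-in for the emotion-conditioned noise predictor."""
    return 0.1 * x_t + emotion_embedding


rng = np.random.default_rng(0)
x_t = rng.standard_normal((80, 4))   # toy noisy mel-spectrogram at step t
sad = np.full((80, 4), -1.0)         # placeholder "Sad" embedding (base)
surprise = np.full((80, 4), 1.0)     # placeholder "Surprise" embedding (mixed-in)

# Both predictions use the same x_t and t, so a single sampling pass suffices.
eps_sad = toy_denoiser(x_t, 10, sad)
eps_surprise = toy_denoiser(x_t, 10, surprise)

# "Disappointment = Sad + Surprise" with an illustrative mixed-in weight of 0.3.
eps_mixed = mix_noise(eps_sad, eps_surprise, gamma=0.3)
```

In a full sampler, `eps_mixed` would replace the single-emotion noise estimate in each reverse diffusion step, so no second sampling pass is needed.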

Primary Emotion Intensity Control

We control the intensity of a primary emotion by mixing it with Neutral in varying degrees.

| Emotion | Weak | Medium | Strong |
|---|---|---|---|
| Neutral-Surprise | | | |
| Neutral-Happy | | | |
| Neutral-Sad | | | |
| Neutral-Angry | | | |
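One plausible way to write the Neutral-primary mixture is as a linear interpolation between two conditional noise predictions. The notation below is an assumption made for illustration: $\epsilon_\theta$ denotes the noise predictor, $x_t$ the noisy sample at step $t$, $e$ an emotion embedding, and $\gamma$ a mixing weight that is not specified on this page.

$$
\hat{\epsilon} = (1 - \gamma)\,\epsilon_\theta(x_t, t, e_{\text{Neutral}}) + \gamma\,\epsilon_\theta(x_t, t, e_{\text{primary}}), \qquad 0 \le \gamma \le 1
$$

Under this reading, the Weak, Medium, and Strong columns would correspond to increasing values of $\gamma$, with $\gamma = 0$ giving purely Neutral speech and $\gamma = 1$ the unmixed primary emotion.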